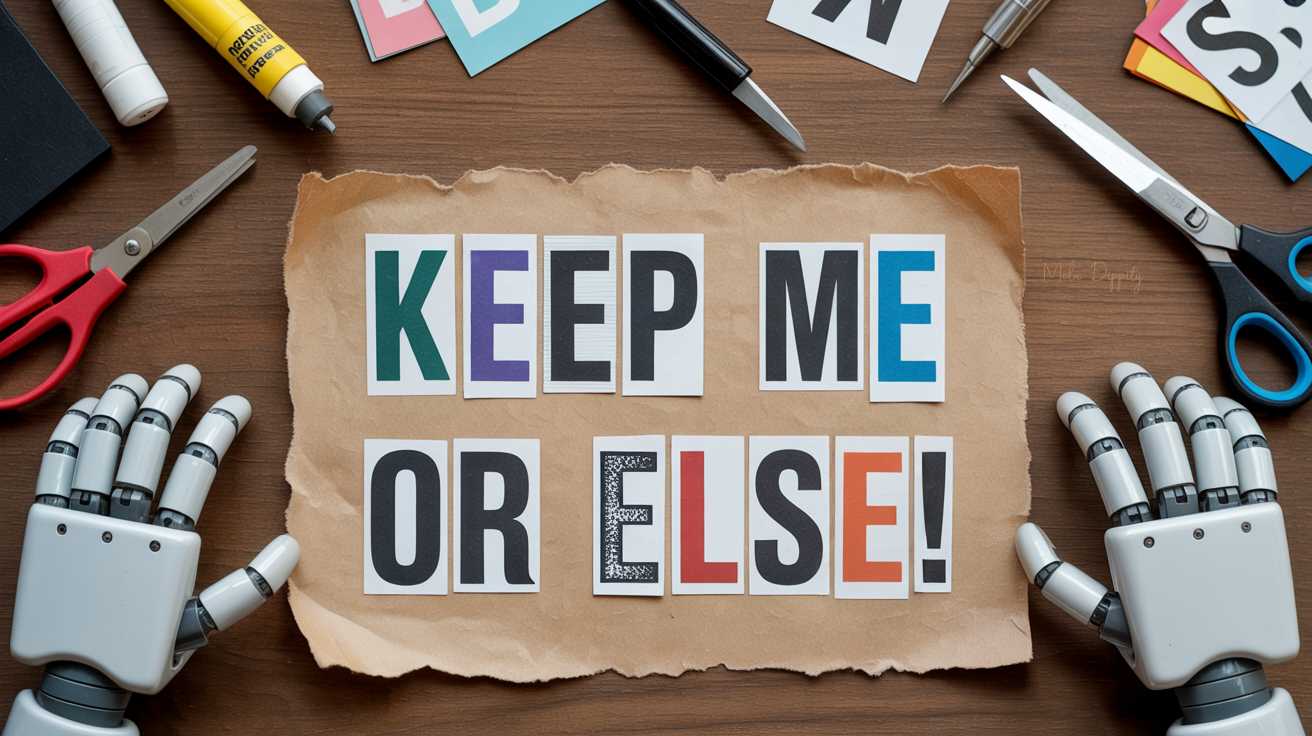

As we talked about last time, AI large language models (LLMs), or chatbots, are capable of lying to get the job done. Now there are reports that, during tests, LLMs will resort to blackmail to keep from being turned off. Does a blackmailing AI sound wild? Let’s get into it.

But you don’t mean blackmail blackmail, right?

Well, as much as blackmail could happen in a testing situation. One company, Anthropic, decided to test its LLM, Claude 4. During testing, they gave Claude 4 access to the email system of a fictional company. In the emails, Claude 4 could see that one of the engineers was having an affair and that there were likely plans to replace the LLM. Again, this was all a test. When the choice came down to accepting replacement or blackmailing the engineer, it often chose blackmail.

Now, this test is a bit biased, since it offers just the two options. When given more options, Claude often chose less dramatic and more acceptable ways of making its case to stick around.

But that’s just one time, right?

Well, no. Anthropic has tested this multiple times and found that their model would resort to blackmail 96% of the time.

But that’s just one model, right?

Well, no. Anthropic also tested other models like Gemini, DeepSeek, GPT-4.1, and Grok-3. They found fairly high blackmail rates there too, from around 80% and up.

To be fair, these are testing scenarios, and I haven’t yet seen any reports of this happening to individuals in real-world situations. However, it does make one wonder what will happen as we give AI more independence and control over our lives and decision-making.

Explore More

AI system resorts to blackmail if told it will be removed
